Welcome Statement🧅

Welcome to Onionfarms. All races, ethnicities, religions. Gay, straight, bisexual. CIS or trans. It makes no difference to us. If you can rock with us, you are one of us. We are here for you and always will be.

Follow Onionfarms/Kenneth Erwin Engelhardt

Onionfarms Merchandise(Stripe Verified): Onionmart

Onionfarms.net (Our social network site powered by Humhub).Register and up your own little space to chat and hang out in.


Kiwifarms Gossip & Slap Fights Lol look at this faggot- OF edition

- Thread starter Yandere

- Start date

-

- Tags

- cringe kiwi farms null

These threads cover general gossip and interacting with Kiwifarms (openly calling them out).

Life is ruff

Hellovan Onion

Almost all of this site currently or used to use KF. It's be expected.lol you're all gay

cjöcker

Remarkable Onion

anyone who replies '[word for shit] take' to any opinion or says 'hot take' is automatically a subhuman in my eyesBrainlet take

fecal Jew takeanyone who replies '[word for shit] take' to any opinion or says 'hot take' is automatically a subhuman in my eyes

cjöcker

Remarkable Onion

Eat shit, janny.

Azusa

Remarkable Onion

Sounds like something you could set up with some Selenium scripting pretty easily. And I'm sure Null has no idea about any of the XF functions tbhHell, I've thought of getting a bot up and running that does nothing except mass-negrating people's posts. I do believe there is a XF function that can retract all reactions given by a specific user which would of course invalidate it, but, done carefully, the idea would be very effective and wouldn't get them deleted.

cjöcker

Remarkable Onion

It's a simple post request. All you need is to send the auth, csrf and session cookies to the reaction URL. I think XF has a rate limit of a couple of seconds but I can't be bothered to figure it out.Sounds like something you could set up with some Selenium scripting pretty easily. And I'm sure Null has no idea about any of the XF functions tbh

<forum url>/posts/<post id>/react?reaction_id=<reaction id>But lets say if someone theoretically did this here are the IDs of all the negrates

Name | ID |

| Dislike | 14 |

| Deviant | 27 |

| Islamic Content | 30 |

| TMI | 29 |

| Dumb | 17 |

| Late | 11 |

| Mad at the Internet | 16 |

post-<post ID> and a data-author attribute containing the author's username) and then theoretically send a HTTP request to the url I mentioned above with their auth, session and CSRF cookie and therefore adding a negative reating to this user's post.I am not at all encouraging anyone to do this.

Negrate every single post on the site.It's a simple post request. All you need is to send the auth, csrf and session cookies to the reaction URL. I think XF has a rate limit of a couple of seconds but I can't be bothered to figure it out.

<forum url>/posts/<post id>/react?reaction_id=<reaction id>

But lets say if someone theoretically did this here are the IDs of all the negrates

So someone could theoretically make a script which theoretically parses the page to negrate certain users's posts (the posts are contained in an article tag and in the article tag there's a data-content attribute containing the post ID formatted like

Dislike 14 Deviant 27 Islamic Content 30 TMI 29 Dumb 17 Late 11 Mad at the Internet 16 post-<post ID>and a data-author attribute containing the author's username) and then theoretically send a HTTP request to the url I mentioned above with their auth, session and CSRF cookie and therefore adding a negative reating to this user's post.

I am not at all encouraging anyone to do this.

Azusa

Remarkable Onion

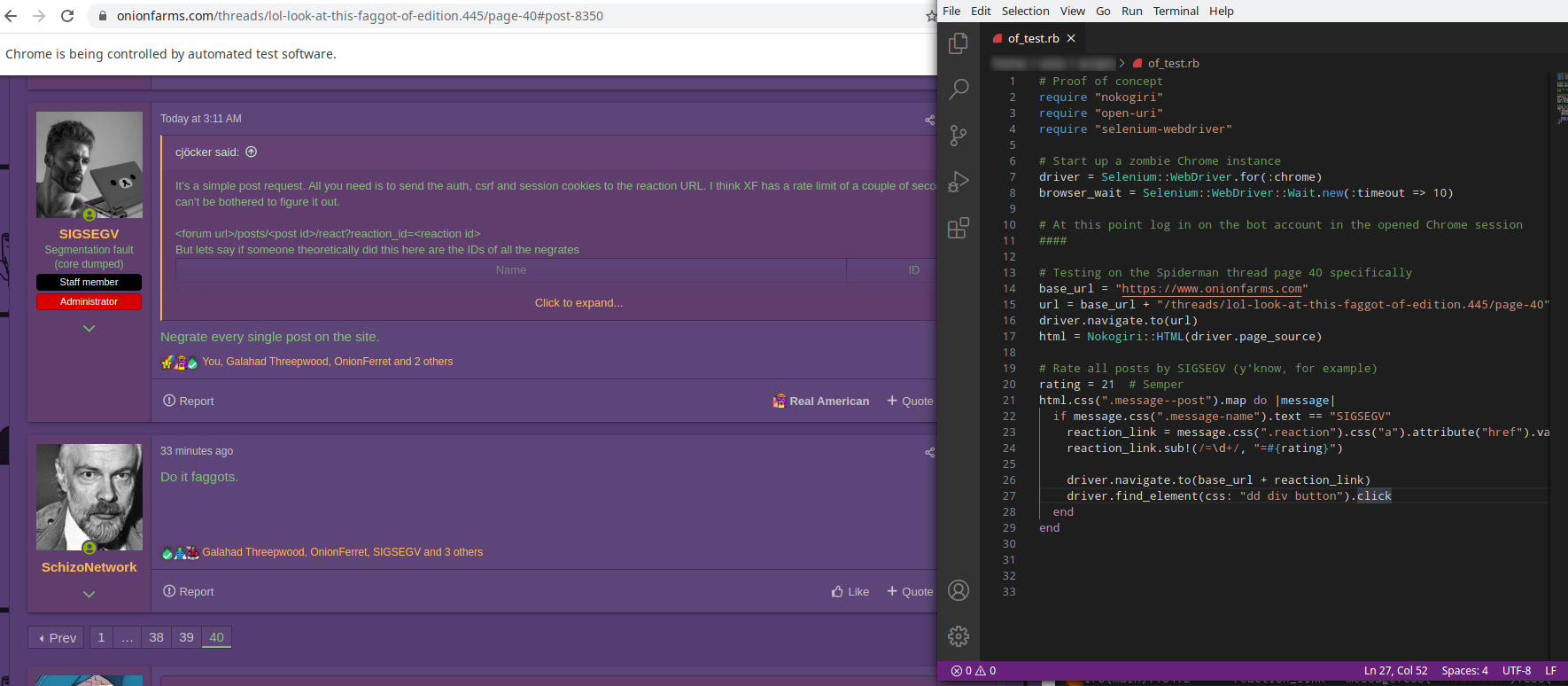

Donezo. (As a proof of concept, anyway).

Ruby:

# Proof of concept

require "nokogiri"

require "open-uri"

require "selenium-webdriver"

# Start up a zombie Chrome instance

driver = Selenium::WebDriver.for(:chrome)

browser_wait = Selenium::WebDriver::Wait.new(:timeout => 10)

# At this point log in on the bot account in the opened Chrome session

####

# Testing on the Spiderman thread page 40 specifically

base_url = "https://www.onionfarms.com"

url = base_url + "/threads/lol-look-at-this-faggot-of-edition.445/page-40"

driver.navigate.to(url)

html = Nokogiri::HTML(driver.page_source)

# Rate all posts by SIGSEGV (y'know, for example)

rating = 21 # Semper

html.css(".message--post").map do |message|

if message.css(".message-name").text == "SIGSEGV"

reaction_link = message.css(".reaction").css("a").attribute("href").value

reaction_link.sub!(/=\d+/, "=#{rating}")

driver.navigate.to(base_url + reaction_link)

driver.find_element(css: "dd div button").click

end

endNo one man should be allowed to have such power.Donezo. (As a proof of concept, anyway).


Bubbly Sink

Registered Member

you're a big fat niggerFrom "Biting the hand that feeds":

View attachment 566

View attachment 565

View attachment 564

He also made a post in the thread to gravedance, but unfortunately @Bubbly Sink is a cowardly faggot and deleted the post by the time I went back to screenshot it. This is especially hilarious because he tried to act all buddy buddy on my profile multiple times. Can you guess what he did almost immediately after making an account on Onion Farms?

View attachment 567

View attachment 568

Imagine my fucking shock.

Azusa

Remarkable Onion

So what I coded there works specifically for page 40 of this thread. The next step (i.e. when I'm not at work or being lazy) would be to generalize it so that it grabs all the urls for threads on Onion Farms (which we can get with the sitemap!), gets all of the pages for each thread (some simple HTML scraping I think, or in the worst case we can just use the zombie Chrome with Selenium, get the number here: e.g.No one man should be allowed to have such power.

and then just manually generate the page list. Once we've got a list of urls we just loop over all of them using that code I wrote earlier to check each page's messages for the user's messages and rate them however we want.

Kiwi Farms works exactly the same way. Y'know, hypothetically (I disavow, etc).

What if I just want to rate every single accessible post on the site autistic?So what I coded there works specifically for page 40 of this thread. The next step (i.e. when I'm not at work or being lazy) would be to generalize it so that it grabs all the urls for threads on Onion Farms (which we can get with the sitemap!), gets all of the pages for each thread (some simple HTML scraping I think, or in the worst case we can just use the zombie Chrome with Selenium, get the number here: e.g.

View attachment 2811

and then just manually generate the page list. Once we've got a list of urls we just loop over all of them using that code I wrote earlier to check each page's messages for the user's messages and rate them however we want.

Kiwi Farms works exactly the same way. Y'know, hypothetically (I disavow, etc).

Azusa

Remarkable Onion

Just remove lines 22 and 28 in that case.What if I just want to rate every single accessible post on the site autistic?

EDIT: To add, the hiccup with doing something like this is that the entire thing stalls if you run into one of these (as I did before):

The 'benefit' of using Selenium rather than just passing html requests around is that you always have mouse/keyboard access to the zombie Chrome instance, so whenever you hit a captcha you can jump in and manually fill it out, and then the script resumes right where it left off.


lol calm down.

You're acting even more shitter-shattered than zed was

If butthurt speds are going to whiteknight a tremendous faggot I cannot promise not to stir the pot

Noghoul

An Onion Among Onions

Life is ruff

Hellovan Onion

"the world's greatest tard museum"

Is he talking about the lolcows or KF's userbase?
